Apache Kafka Integration with Boomi: A Quick Setup Guide

Apache Kafka, known for its real-time data streaming capabilities, integrates with the Boomi platform through the Kafka connector. This integration lets you use Kafka’s reliable messaging and event-driven architecture for scalable data exchange between applications, systems, and workflows. With the Kafka connector configured, Boomi users can build a resilient messaging setup in which producer and consumer processes handle high data volumes efficiently. This guide walks you through setting up a Kafka connection, configuring the producer and consumer processes, and verifying that data flows between them in real time.

What is Kafka:

Kafka is a distributed event streaming platform widely used for real-time data processing and messaging. In Boomi, the Kafka connector lets integration processes publish messages to, and read messages from, Kafka topics, enabling real-time data exchange and reliable, scalable, fault-tolerant workflows across applications and systems.

Using Kafka in the Boomi Integration Process:

When integrating Kafka into a Boomi process, there are two sides to consider:

- The Producer Process.

- The Consumer Process.

The producer process publishes messages to a Kafka topic, and the consumer process reads messages from that topic. Because Kafka retains messages in the topic for a configurable period, the two processes are decoupled and do not need to run in lockstep, which is what lets a Boomi integration with Kafka handle large data volumes reliably.
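To make the producer/consumer split concrete, here is a toy, in-memory model in Python. `ToyTopic` and `ToyConsumerGroup` are hypothetical names; this is not a Kafka client and it ignores replication, retention, and networking. It only illustrates that a producer appends messages to a topic’s partitions and that a consumer group remembers how far it has read.

```python
from collections import defaultdict


class ToyTopic:
    """Hypothetical in-memory stand-in for a Kafka topic (not a real client)."""

    def __init__(self, name: str, partitions: int = 1):
        self.name = name
        # Each partition is an append-only list of messages.
        self.partitions = [[] for _ in range(partitions)]

    def produce(self, value: str, partition: int = 0) -> int:
        """Append a message to one partition and return its offset there."""
        log = self.partitions[partition]
        log.append(value)
        return len(log) - 1


class ToyConsumerGroup:
    """Remembers, per partition, the offset of the next unread message."""

    def __init__(self, group_id: str):
        self.group_id = group_id
        self.positions = defaultdict(int)  # partition index -> next offset

    def poll(self, topic: ToyTopic) -> list:
        """Return every unread message and advance the stored positions."""
        records = []
        for index, log in enumerate(topic.partitions):
            records.extend(log[self.positions[index]:])
            self.positions[index] = len(log)
        return records


if __name__ == "__main__":
    topic = ToyTopic("test")
    topic.produce("hello from the producer")
    group = ToyConsumerGroup("boomi-consumers")
    print(group.poll(topic))  # ['hello from the producer']
    print(group.poll(topic))  # [] (nothing new since the last poll)
```

Because the group stores its position, a second poll returns nothing until the producer writes again; real Kafka achieves the same effect with committed offsets.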

Creating a Kafka Connection

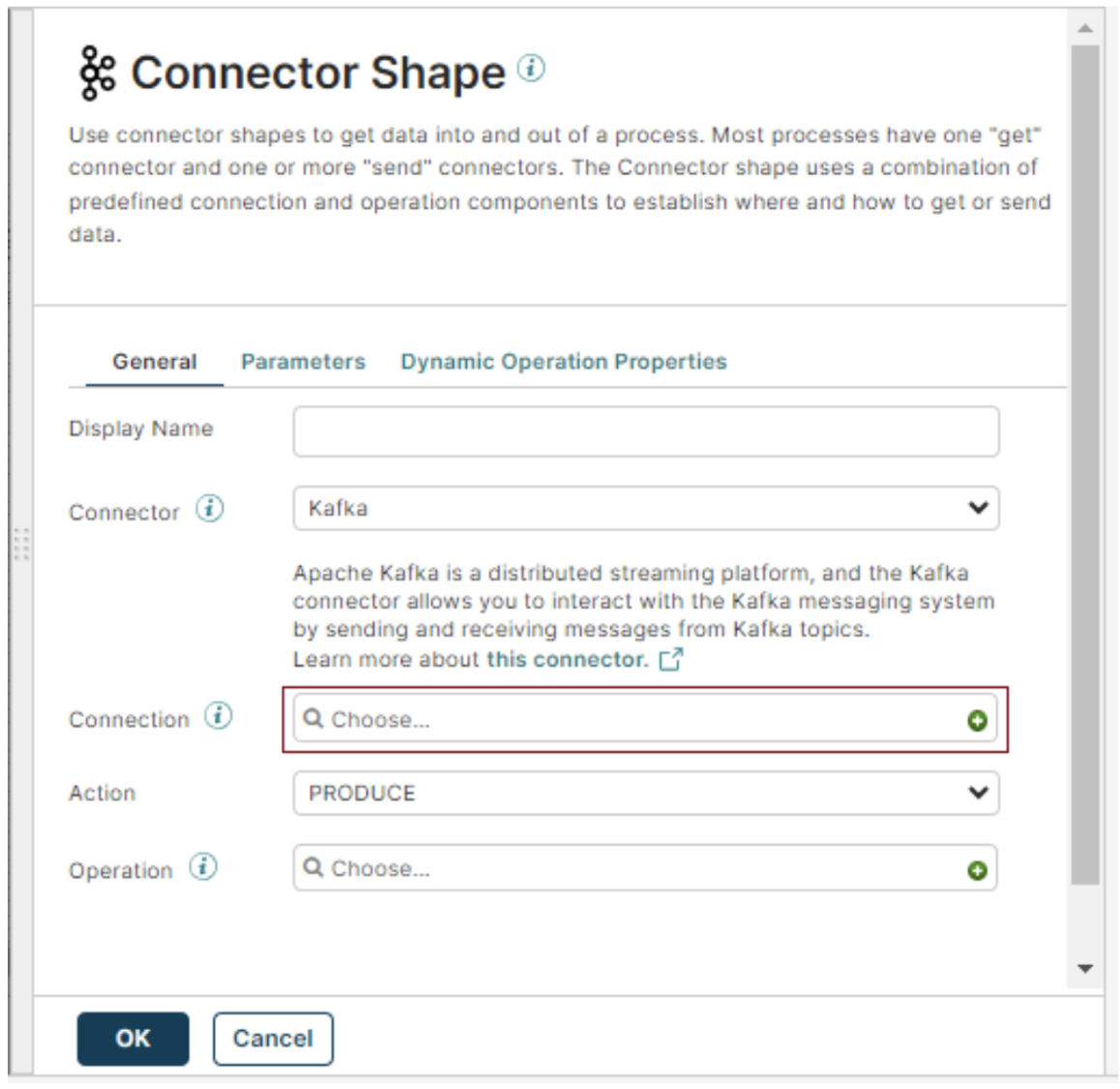

- Create a new Boomi process.

- Drag and drop the ‘Kafka’ connector into the process.

- Click the plus icon next to the Connection field.

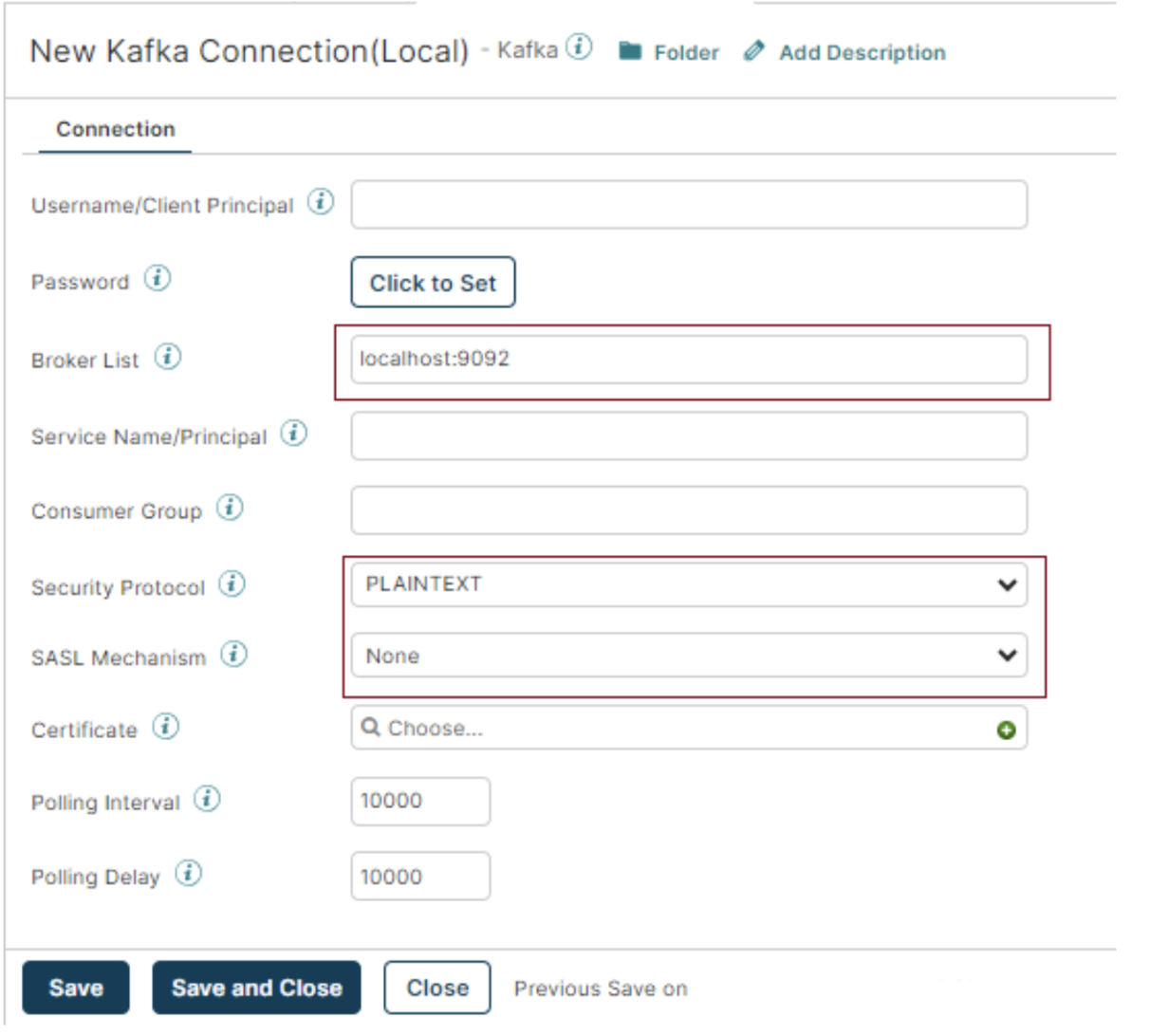

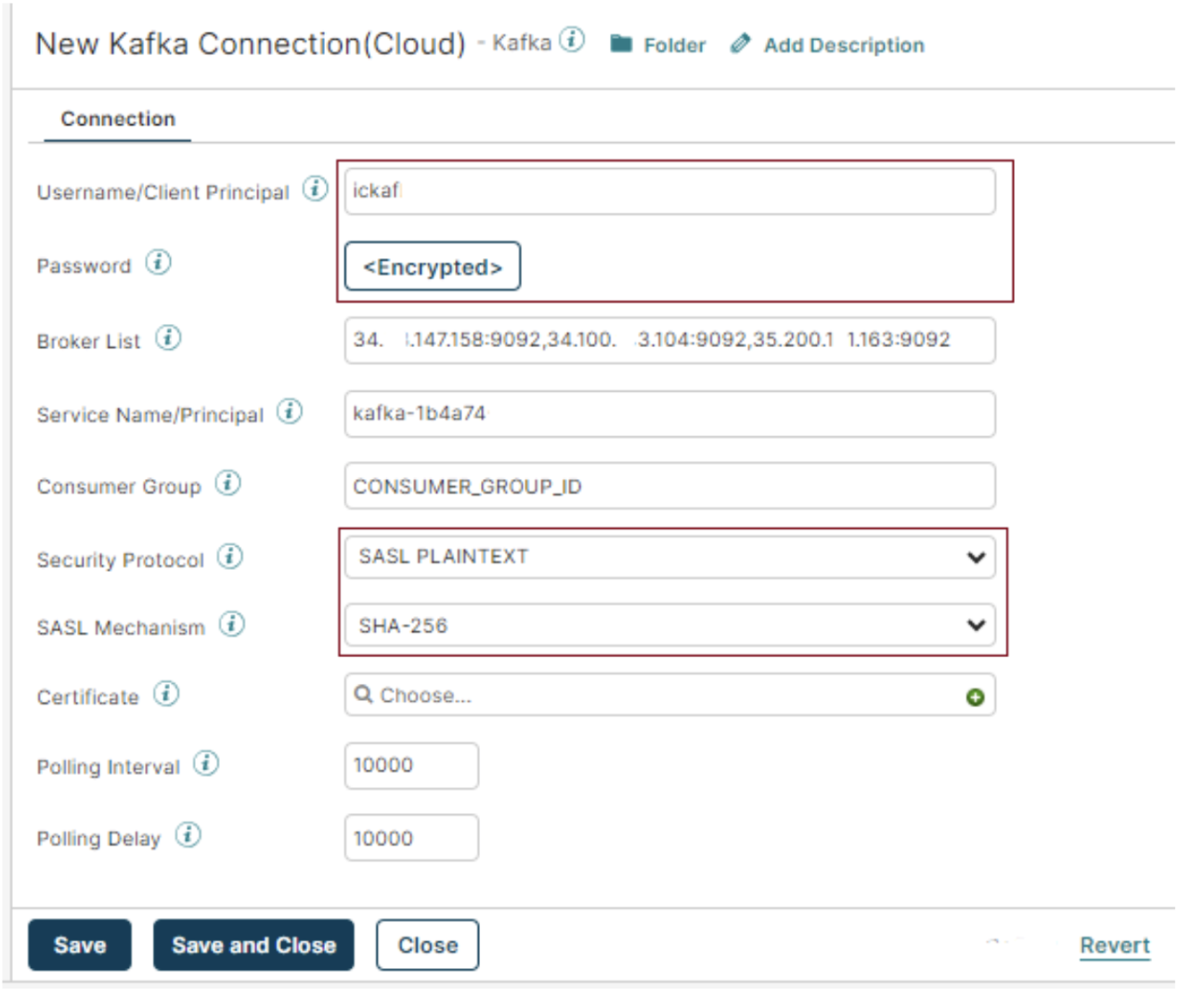

- Create a new connection and enter the Kafka server details. In the broker list, enter each broker as an IP address (or host name) and port in the form IP:Port, separating multiple brokers with commas (for example, 10.0.0.1:9092,10.0.0.2:9092). If the cluster uses security features (SASL and/or SSL) for authentication and data protection, also provide the certificate, username, and password.

- If you are using a cloud-hosted Kafka service, provide the username and password together with the security protocol and the SASL mechanism.

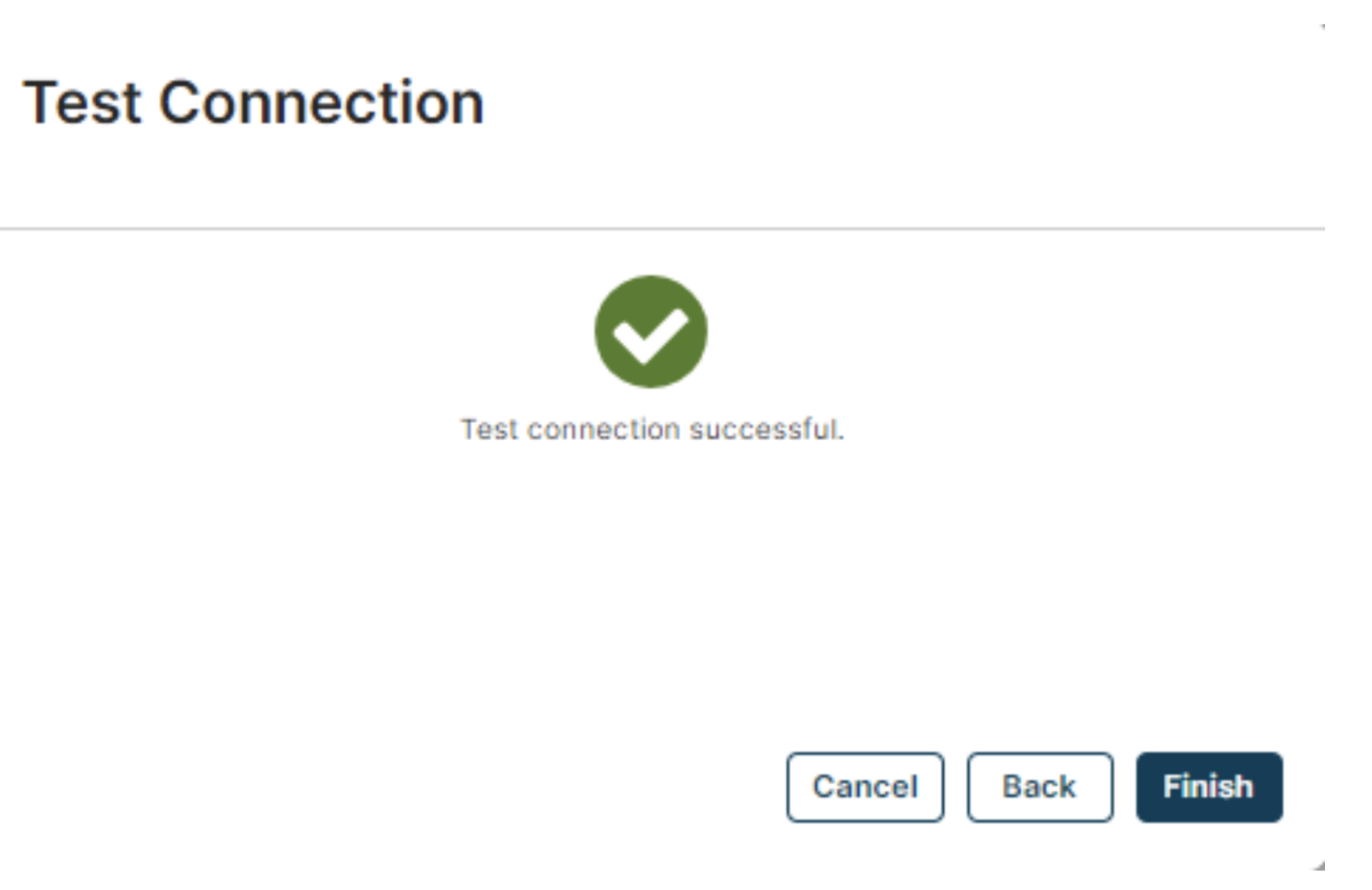

- Test the connection using the local Atom.

- Once the connection test succeeds, save and close the Kafka connection screen.

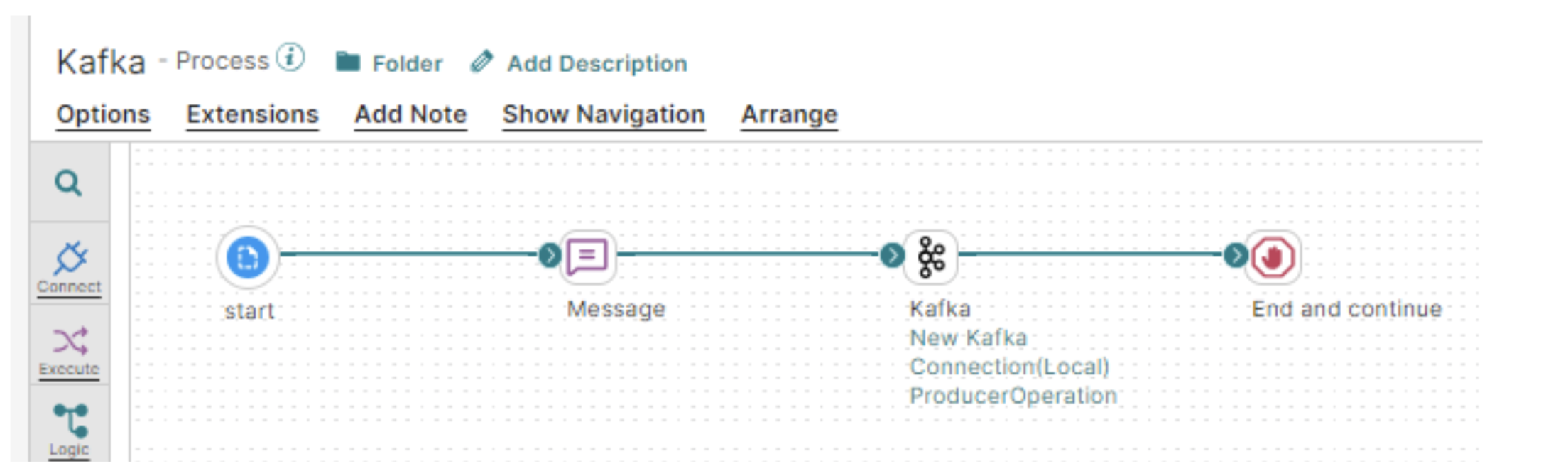
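The broker list and the SASL fields on the connection screen correspond to standard Apache Kafka client settings. The Python sketch below is illustrative only: `parse_broker_list` and `sasl_plain_properties` are hypothetical helpers, the credentials are placeholders, and it assumes SASL/PLAIN over TLS (`SASL_SSL`), a common choice for hosted Kafka services. `security.protocol`, `sasl.mechanism`, and `sasl.jaas.config` are real Kafka client property names, but how Boomi passes your entries to the cluster internally is not shown here.

```python
def parse_broker_list(brokers: str) -> list[tuple[str, int]]:
    """Split a comma-separated 'host:port' string into (host, port) pairs."""
    pairs = []
    for entry in brokers.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing comma or empty entry
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host, int(port)))
    return pairs


def sasl_plain_properties(username: str, password: str) -> dict[str, str]:
    """Kafka client properties for SASL/PLAIN authentication over TLS."""
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "security.protocol": "SASL_SSL",  # SASL authentication on a TLS channel
        "sasl.mechanism": "PLAIN",        # must match the JAAS login module above
        "sasl.jaas.config": jaas,
    }


if __name__ == "__main__":
    print(parse_broker_list("10.0.0.1:9092, 10.0.0.2:9092"))
    # [('10.0.0.1', 9092), ('10.0.0.2', 9092)]
    for key, value in sasl_plain_properties("my-api-key", "my-api-secret").items():
        print(f"{key}={value}")
```

Validating the broker list up front catches the most common typo (a missing port) before you run the connection test.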

The Producer Process

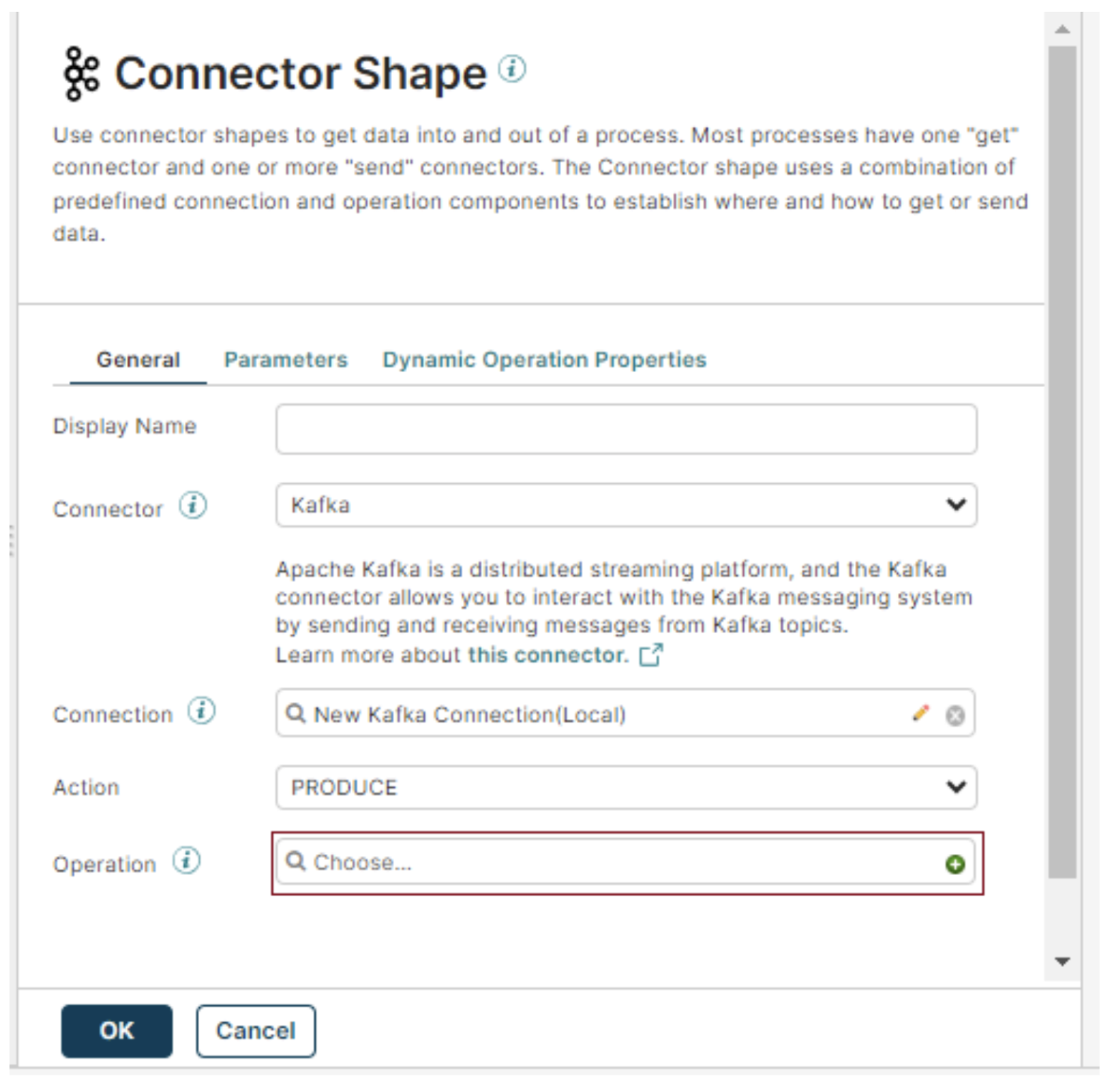

- Configure the Kafka Connector by selecting ‘Produce’ under Action.

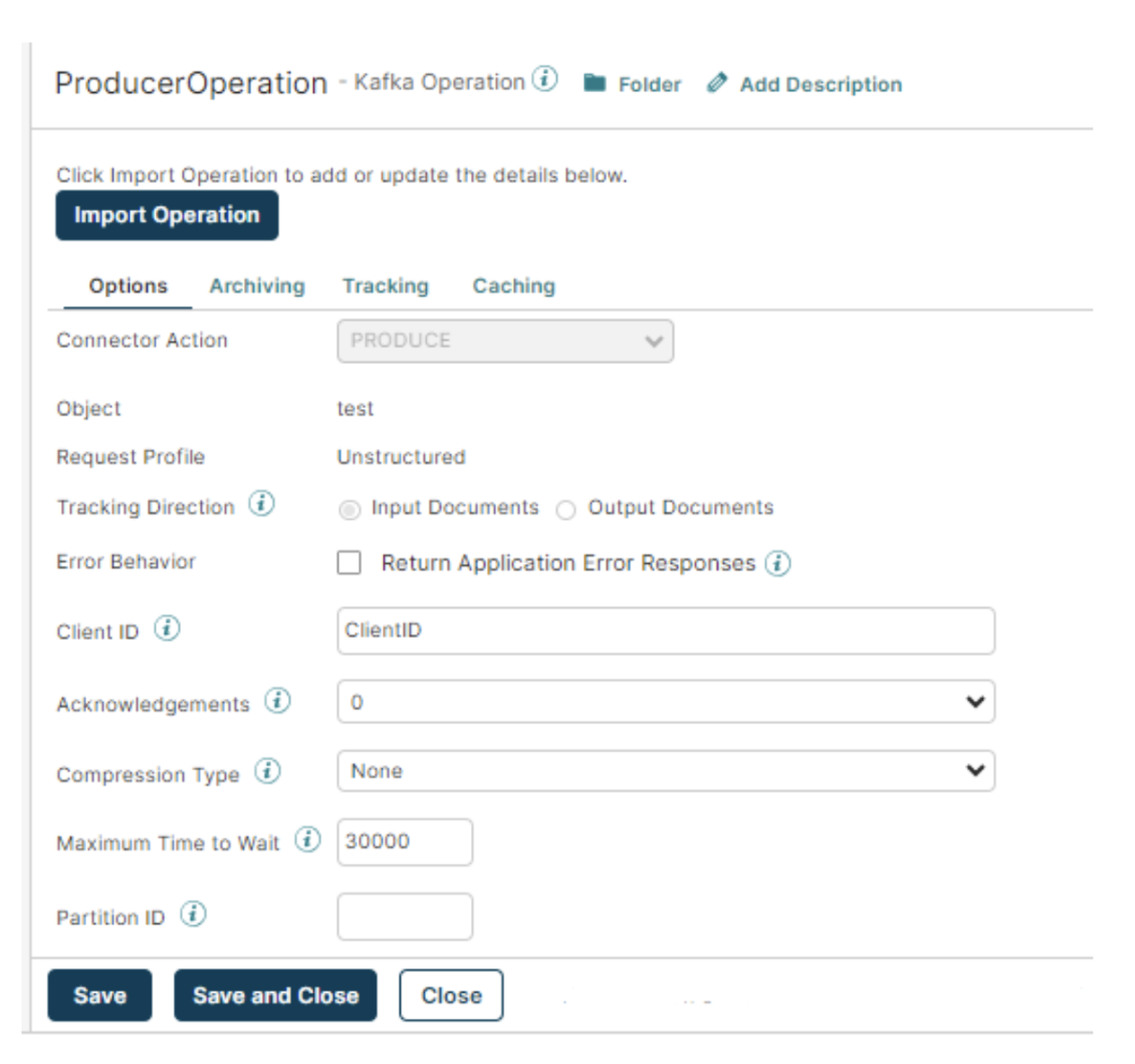

- Click on the plus icon next to Operation, import your topic (‘test’), and finish the configuration.

- Confirm the settings, including Client ID and Partition ID, and click ‘OK’ to complete the Kafka ‘Produce’ configuration.

- Complete the process by adding a Stop shape at the end.

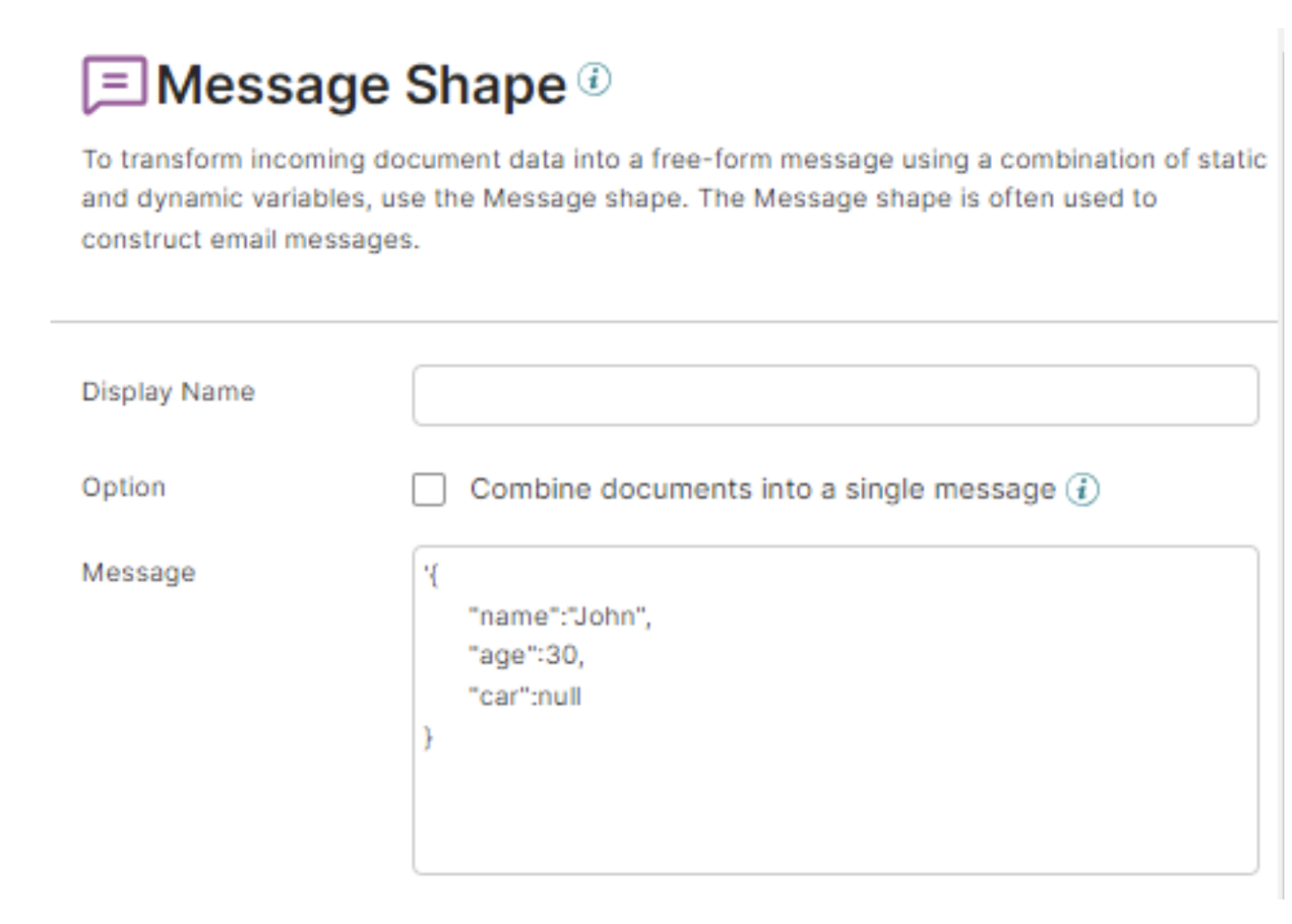

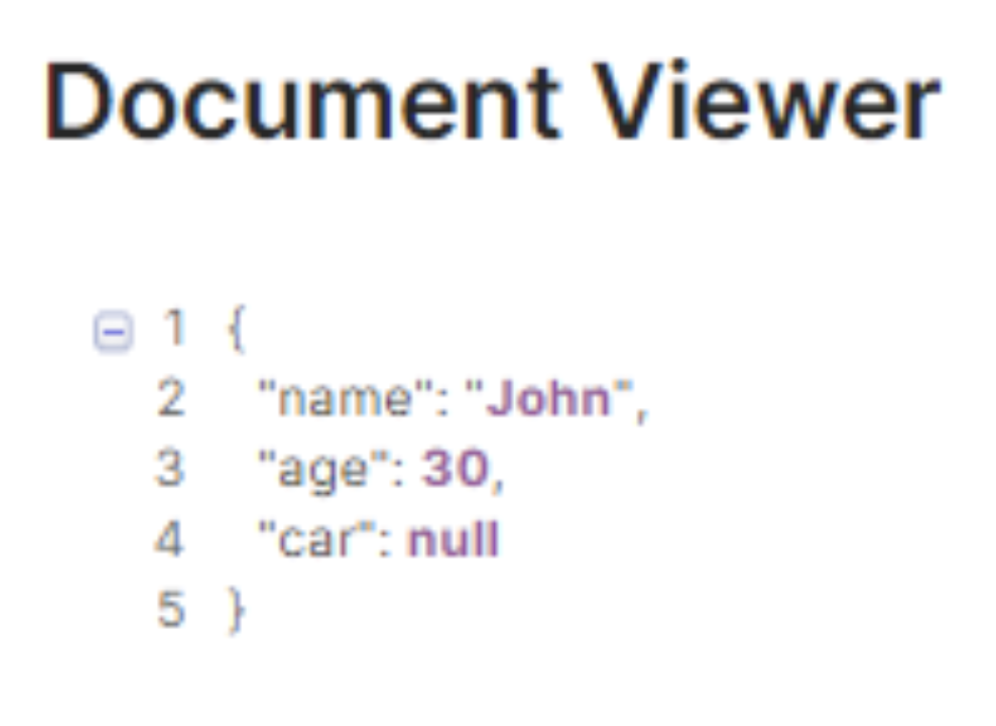

Data in the Message shape that is sent to the Consumer process:
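The Partition ID setting controls which partition of the topic receives each message. The Python sketch below shows the general idea, assuming the field maps to the partition of each produced record: an explicit partition wins, otherwise a keyed message is hashed to a partition, and keyless messages are spread across partitions. `ToyPartitioner` is a hypothetical name, and this is a simplified stand-in rather than Kafka’s actual partitioner: real Kafka producers hash keys with murmur2 (not `zlib.crc32`) and, since Kafka 2.4, batch keyless messages with a sticky partitioner instead of strict round-robin.

```python
import itertools
import zlib
from typing import Optional


class ToyPartitioner:
    """Simplified partition chooser (hypothetical; not Kafka's algorithm)."""

    def __init__(self, num_partitions: int):
        if num_partitions < 1:
            raise ValueError("a topic needs at least one partition")
        self.num_partitions = num_partitions
        self._round_robin = itertools.cycle(range(num_partitions))

    def choose(self, partition_id: Optional[int] = None,
               key: Optional[bytes] = None) -> int:
        # 1. An explicitly configured partition is used as-is.
        if partition_id is not None:
            if not 0 <= partition_id < self.num_partitions:
                raise ValueError(f"partition {partition_id} does not exist")
            return partition_id
        # 2. Same key -> same partition, which preserves per-key ordering.
        if key is not None:
            return zlib.crc32(key) % self.num_partitions
        # 3. No key: hand out partitions in turn (crc32/round-robin are stand-ins).
        return next(self._round_robin)


if __name__ == "__main__":
    partitioner = ToyPartitioner(num_partitions=3)
    print(partitioner.choose(partition_id=2))  # 2
    print(partitioner.choose(key=b"order-42") == partitioner.choose(key=b"order-42"))  # True
    print([partitioner.choose() for _ in range(4)])  # [0, 1, 2, 0]
```

Pinning a partition is simple for a single-partition topic such as ‘test’, but on multi-partition topics letting the key decide usually spreads load better while keeping related messages in order.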

The ‘Consume’ Process:

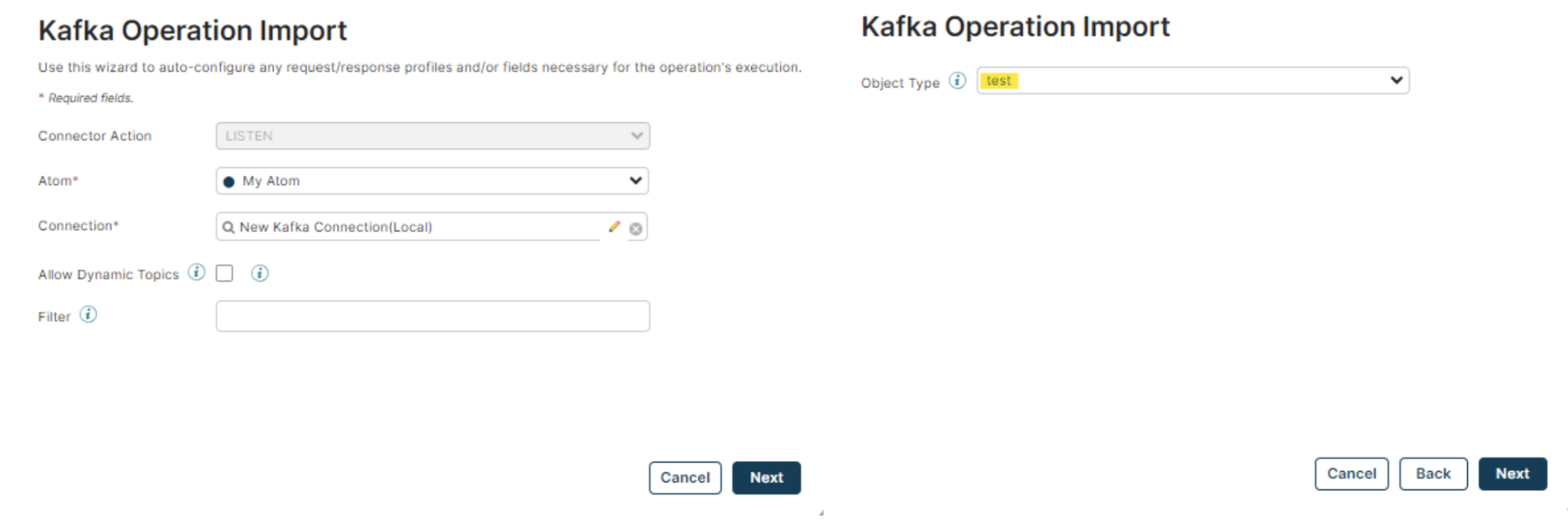

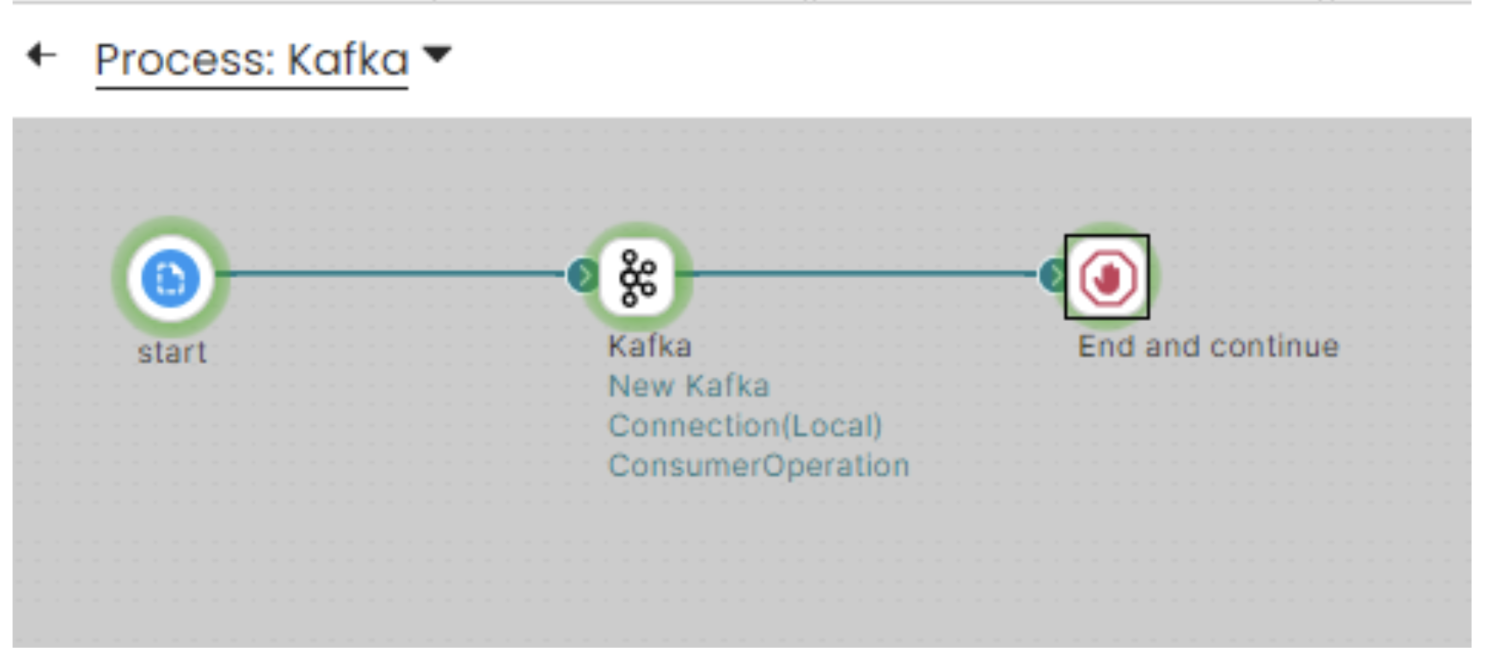

- In the ‘Consume’ process, configure the Start shape by selecting ‘Kafka’ under Connector and ‘Consume’ under Action.

- Choose the previously created Kafka connection.

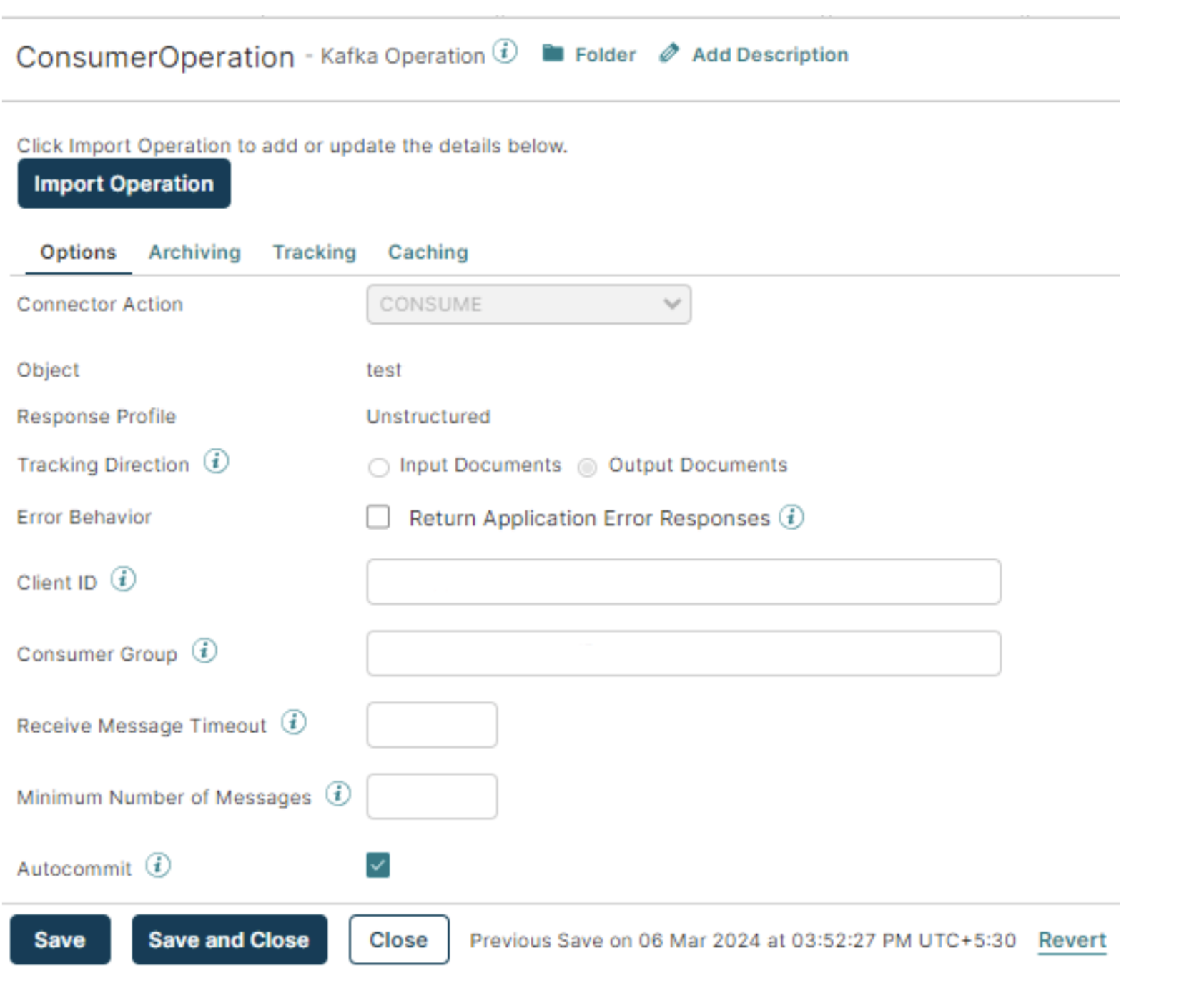

- Create an operation by importing your topic (‘test’).

- Confirm settings, including Client ID and Consumer Group, and click ‘OK’ to complete the Kafka ‘Consume’ configuration.

- Alternatively, set the action to ‘Listen’ to receive messages from the topic in real time as they arrive.

- When using Listen, configure the operation and then deploy the process so the listener can start receiving messages.

- Run the producer process first so that the topic contains a message.

- Then run the consumer process to read the data from the Kafka topic.

- The consumer receives the message that the producer sent.
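Whether the consumer sees a message produced before it started depends on where its consumer group begins reading. In Kafka, a group resumes from its last committed offset; a group with no valid committed offset starts according to the consumer setting `auto.offset.reset` (`earliest` or `latest`, with `latest` as the Kafka client default). The Python sketch below models only that decision. `starting_offset` is a hypothetical helper, and whether the Boomi Consume operation exposes this setting, and under what name, is an assumption to check in the operation’s settings.

```python
from typing import Optional


def starting_offset(committed: Optional[int], log_start: int, log_end: int,
                    auto_offset_reset: str = "latest") -> int:
    """Return the offset a consumer group starts reading a partition from.

    log_start is the oldest offset still retained; log_end is the offset
    the next produced message will receive.
    """
    # A valid committed offset from an earlier run always takes priority.
    if committed is not None and log_start <= committed <= log_end:
        return committed
    # New group (or stale offset): fall back to the reset policy.
    if auto_offset_reset == "earliest":
        return log_start
    if auto_offset_reset == "latest":
        return log_end
    # Any other policy (e.g. "none") refuses to guess, as Kafka's does.
    raise ValueError(f"no valid committed offset and reset policy is {auto_offset_reset!r}")


if __name__ == "__main__":
    # The producer already wrote one message (offset 0), so log_end is 1.
    print(starting_offset(None, 0, 1, "earliest"))  # 0: the message is read
    print(starting_offset(None, 0, 1, "latest"))    # 1: the message is skipped
```

In other words, running the producer first reliably delivers the message when the consumer group reads from the earliest offset or already has a committed position; a brand-new group on `latest` would instead wait for the next message.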

Integrating Apache Kafka with Boomi opens up powerful possibilities for real-time data streaming and scalable communication across your systems. By following these configuration steps, you’re well on your way to establishing a resilient and efficient messaging framework within your Boomi environment. Whether you’re handling high data volumes or enabling seamless, event-driven processes, the Kafka Connector is a vital tool for modern integration solutions.

Ready to dive deeper? Contact us today to explore more advanced integration techniques!